If you read Part 1, you know that we go fast, just like Ricky Bobby.

The key to our speed?

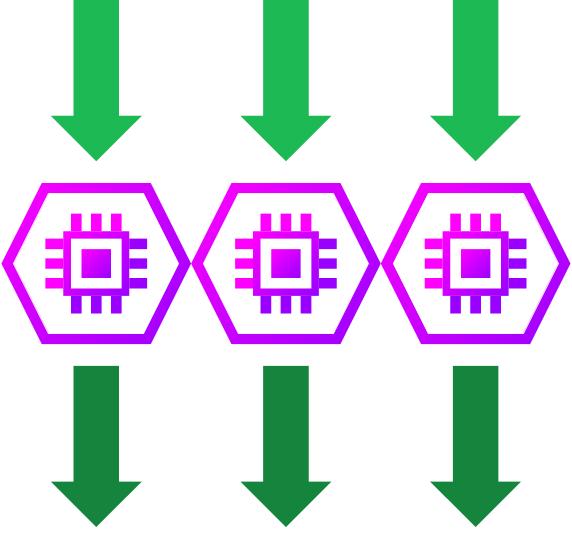

Parallel processing.

But let’s set the stage first.

Imagine you own a newspaper delivery service. You deliver newspapers by car.

Business is booming, so you start expanding. You hire more employees. But now you need to decide how to get these employees to houses. You can either:

- buy a bigger car to fit more employees, or

- buy more cars and give each employee a car.

This is the decision to scale vertically or to scale horizontally.

Both choices would work fine at a limited scale, and maybe you wanted a new, good-looking car for yourself anyway. But ask yourself this: what happens when you need to hire 10 more employees? 20 employees? 50 employees?

If you decided to scale vertically, you’d end up with the longest limousine in the world as your company car. Definitely eye-catching, but not the most practical vehicle for delivering newspapers.

In the music streaming business, things move a lot faster. Thanks to you, the streamer, Beatdapp needs to deliver one million “newspapers” per second.

That would be one long limousine…

Fortunately, we can get computers to process these deliveries for us, so a limousine (or anything on wheels, for that matter) was out of the question.

But Beatdapp still had to make the decision between vertical or horizontal scaling. We could:

- vertically scale our processor by adding more CPU and RAM, or

- horizontally scale by just adding more processors.
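To put the horizontal option in numbers: if throughput grew linearly with the number of processors (the ideal case, which real systems only approximate), the question becomes simple division. The sketch below assumes a hypothetical per-processor rate of 50,000 streams per second; that figure is for illustration only and is not a Beatdapp measurement.

```python
# Target rate from the post: one million streams ("newspapers") per second.
TARGET_STREAMS_PER_SEC = 1_000_000


def processors_needed(target_rate: int, per_processor_rate: int) -> int:
    """How many identical processors cover target_rate, assuming
    throughput scales linearly as processors are added."""
    if per_processor_rate <= 0:
        raise ValueError("per_processor_rate must be positive")
    # Integer ceiling division: a partial processor still means one more machine.
    return (target_rate + per_processor_rate - 1) // per_processor_rate


# Hypothetical per-processor throughput, for illustration only.
print(processors_needed(TARGET_STREAMS_PER_SEC, 50_000))  # 20
```

Doubling the load just doubles the processor count. With vertical scaling, the same growth would require a single ever-larger machine: the limousine problem.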

Well, with a little encouragement from the wise words of Ricky Bobby, we saw only one option: horizontal scaling.

Now, before we talk about our bread and butter, parallel processing, let’s rewind for a second to our newspaper example:

If you scaled horizontally, how would you make sure your delivery drivers don’t deliver to the same house twice?

Well, we didn’t mention this explicitly, but if you had a booming newspaper business, cell phones probably didn’t exist yet. So if your drivers needed to tell each other which houses they’d already visited, they were out of luck.

How about just giving each delivery driver a list of streets to deliver to? Each driver delivers to their assigned streets, clocks out, and goes home. That solves it, right?

Actually, yes, it does. No trick questions here.

We do the same with our processors. We assign each processor a partition of all incoming streams, and each processor can chug away without needing to know what the other processors are doing.
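Here is a minimal sketch of the “list of streets” idea applied to streams. It assumes, hypothetically, that each stream event is a `(listener_id, track_id)` pair and that events are partitioned by hashing `listener_id`; the post doesn’t say which key Beatdapp actually partitions on. Because every event for a given listener lands in the same partition, each worker can count that listener’s plays without coordinating with anyone else. Threads are used only to keep the example self-contained; in a real deployment each partition would typically be handled by a separate process or machine.

```python
import zlib
from collections import Counter
from concurrent.futures import ThreadPoolExecutor

NUM_PARTITIONS = 4  # hypothetical partition count


def partition_for(listener_id: str, num_partitions: int = NUM_PARTITIONS) -> int:
    # crc32 is stable across processes and machines (unlike Python's salted
    # hash() for strings), so any router sends a listener to the same partition.
    return zlib.crc32(listener_id.encode("utf-8")) % num_partitions


def split(events, num_partitions=NUM_PARTITIONS):
    """Route each (listener_id, track_id) event to its partition: the driver's 'streets'."""
    parts = [[] for _ in range(num_partitions)]
    for listener_id, track_id in events:
        parts[partition_for(listener_id, num_partitions)].append((listener_id, track_id))
    return parts


def process_partition(events):
    """One processor's work: count plays per listener in its own partition only."""
    return Counter(listener_id for listener_id, _ in events)


def run_parallel(events, num_partitions=NUM_PARTITIONS):
    parts = split(events, num_partitions)
    # Each partition is processed independently, side by side.
    with ThreadPoolExecutor(max_workers=num_partitions) as pool:
        results = list(pool.map(process_partition, parts))
    merged = Counter()
    for partial in results:
        merged.update(partial)  # partitions hold disjoint listeners, so this is a plain union
    return merged


events = [("alice", "t1"), ("bob", "t2"), ("alice", "t3"), ("carol", "t1"), ("bob", "t1")]
print(run_parallel(events) == Counter(l for l, _ in events))  # True
```

The key design choice is a deterministic routing function: no worker ever asks “has someone else handled this listener?”, because the hash already answered that question.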

This means that when we need more throughput, say when a popular artist drops a new album, we can instantly deploy more processors and have them work side by side seamlessly.
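One common way to make “just add processors” work is to keep a fixed set of partitions and spread them over however many processors are running. The sketch below uses a simple round-robin assignment over a hypothetical 12 partitions. Real systems (for example, Kafka consumer groups) use more careful rebalancing strategies, and the post doesn’t say which one Beatdapp uses.

```python
NUM_PARTITIONS = 12  # hypothetical fixed partition count


def assign_partitions(num_processors: int, num_partitions: int = NUM_PARTITIONS) -> dict[int, list[int]]:
    """Round-robin partitions over processors. Each processor gets a disjoint
    set of partitions, so no two processors ever handle the same streams."""
    if num_processors < 1:
        raise ValueError("need at least one processor")
    assignment = {p: [] for p in range(num_processors)}
    for partition in range(num_partitions):
        assignment[partition % num_processors].append(partition)
    return assignment


normal_day = assign_partitions(3)
album_drop = assign_partitions(6)  # scale out: twice the processors, half the work each
print(normal_day[0])  # [0, 3, 6, 9]
print(album_drop[0])  # [0, 6]
```

One caveat follows from this design: the partition count caps how far you can scale out. With 12 partitions, a 13th processor would sit idle, so the partition count has to be chosen with peak load in mind.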

That is called parallel processing, and it’s why Beatdapp can outpace the throughput of worldwide streaming.